Almost everyone finds professional cameras and photography appealing. We usually focus on the theoretical rules of a good frame, the art of storytelling, and so on. A creative mind also comes with a lot of curiosity, which we usually set aside to concentrate on things that seem more worthwhile. Simply put, we get inspired by our favourite photographers, imitate their aesthetics, and create excellent images using techniques similar to theirs. However, by using the camera only in the way we have been taught, we limit ourselves. A camera is capable of much more, but only if we understand how it works.


Meaning of the word: Photography

The word photography simply means "drawing with light". It is derived from the Greek roots "photo", meaning light, and "graph", meaning to draw.

It can be defined as the practice of creating durable images by recording light (photons), either electronically or chemically, with the help of a light-sensitive material (something that reacts to photons in a certain way).

Basic science which makes photography possible

Asking how we can capture our surroundings in a simple digital form may feel as profound as questioning why life exists, and nobody can deny that we have all given it some thought. Unlike that question, however, photography is a real-life miracle created entirely by humans, so we do understand the science behind how it works.

If you are familiar with the dual nature of light, you know that photons behave both as tiny packets of energy (particles) and as waves. When light reflected from an object travels to our eyes, the lens of the eye refracts and converges it, focusing it on the retina, so we can see the object as long as the wavelength falls within the visible spectrum. Something similar happens in a camera. Modern photography uses a lens to focus the reflected light onto a photosensitive surface or an electronic sensor, forming a real image of the scene or object that reflected the light.
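To make the focusing step concrete, here is a minimal sketch using the thin-lens equation, 1/f = 1/d_o + 1/d_i. This is an idealisation (a real camera lens is a compound system of many elements), and the 50 mm focal length and 2 m subject distance are illustrative values, not figures from this article. For a subject farther away than the focal length, the image distance comes out positive and the magnification negative, meaning a real, inverted image forms behind the lens.

```python
def image_distance_mm(focal_length_mm: float, object_distance_mm: float) -> float:
    """Thin-lens equation 1/f = 1/d_o + 1/d_i, solved for the image distance d_i."""
    if object_distance_mm <= focal_length_mm:
        # At or inside the focal length, no real image forms behind the lens.
        raise ValueError("object must be farther away than the focal length")
    return 1.0 / (1.0 / focal_length_mm - 1.0 / object_distance_mm)


def magnification(focal_length_mm: float, object_distance_mm: float) -> float:
    """Lateral magnification m = -d_i / d_o; a negative value means the image is inverted."""
    return -image_distance_mm(focal_length_mm, object_distance_mm) / object_distance_mm


# Illustrative case: a 50 mm lens focused on a subject 2 m (2000 mm) away.
d_i = image_distance_mm(50.0, 2000.0)
m = magnification(50.0, 2000.0)
print(f"image forms {d_i:.1f} mm behind the lens, magnification {m:.3f}")
```

The image lands only slightly behind the focal point, which is why focusing a camera lens involves moving its elements by just a millimetre or so for distant subjects.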

Three steps to create an image

In this article we will focus mostly on modern digital photography, which involves three main stages to create an image:

- Using a lens to converge photons

- Capturing photons on the image sensor

- Transferring the data and converting it into digital form

1. Using a photographic lens

A photographic lens uses a series of convex and concave lens elements with different focal lengths to refract the incoming light rays and converge them onto the camera sensor. The focal length determines how much of the scene is covered: the longer the focal length, the higher the magnification and the narrower the view. For example, a lens with a 35mm focal length gives a much wider view than a lens with a 100mm focal length.
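The 35mm versus 100mm comparison can be put into numbers with the standard angle-of-view formula, 2 x atan(w / 2f). This sketch assumes a full-frame sensor 36 mm wide, a rectilinear lens, and focus at infinity; the sensor width is an assumption, since the article does not specify a sensor size.

```python
import math

SENSOR_WIDTH_MM = 36.0  # assumption: full-frame (36 x 24 mm) sensor, horizontal width


def horizontal_angle_of_view(focal_length_mm: float,
                             sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Horizontal angle of view in degrees for a rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


for f in (35, 100):
    print(f"{f} mm lens: about {horizontal_angle_of_view(f):.1f} degrees across")
```

On this assumed sensor, the 35mm lens takes in roughly 54 degrees horizontally, while the 100mm lens covers only about 20 degrees, so it magnifies a much smaller slice of the scene.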

Lenses with variable focal lengths (zoom lenses) provide optical magnification, allowing you to zoom into the scene without the loss of quality that comes from digitally zooming in or cropping the image afterwards.
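Why digital zoom costs quality can be shown with simple arithmetic: cropping by a zoom factor z keeps only 1/z of the width and 1/z of the height, so only 1/z² of the pixels remain. The 6000 x 4000 pixel (24 MP) sensor below is a hypothetical example.

```python
from typing import Tuple


def digital_zoom_resolution(width_px: int, height_px: int, zoom: float) -> Tuple[int, int]:
    """Pixels left after cropping the centre of the frame by a digital zoom factor."""
    if zoom < 1:
        raise ValueError("zoom factor must be >= 1")
    return int(width_px / zoom), int(height_px / zoom)


# Hypothetical 24-megapixel sensor (6000 x 4000 pixels).
w, h = digital_zoom_resolution(6000, 4000, 2.0)
print(f"2x digital zoom keeps {w} x {h} = {w * h / 1e6:.0f} MP")
```

A 2x optical zoom, by contrast, still spreads the magnified scene across all 24 MP of the sensor.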

The amount of light entering the camera can be controlled with the aperture, an opening in the lens that can widen (dilate) or narrow (contract) as needed, much like the iris of the human eye. If more light is needed, we open it wider; if less light is needed, we narrow it so that less light enters. Aperture is one of the three ways to control the brightness of an image, along with shutter speed and ISO.
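Aperture is usually expressed as an f-number N = f / D, the focal length divided by the diameter of the opening. Because the light reaching the sensor scales with the area of the opening, it varies as 1/N². The sketch below applies these standard relations to example values (a 50 mm lens, f/2.8 and f/4) chosen for illustration.

```python
import math


def aperture_diameter_mm(focal_length_mm: float, f_number: float) -> float:
    """The f-number N is focal length divided by aperture diameter: N = f / D."""
    return focal_length_mm / f_number


def relative_light(f_from: float, f_to: float) -> float:
    """Fraction of light kept when changing f-number; light scales as 1 / N**2."""
    return (f_from / f_to) ** 2


def stops_between(f_from: float, f_to: float) -> float:
    """Each stop halves the light, so stops = log2 of the light ratio (positive = narrower)."""
    return 2 * math.log2(f_to / f_from)


print(aperture_diameter_mm(50, 2))          # 25.0 (mm opening on a 50 mm lens at f/2)
print(round(relative_light(2.8, 4), 2))     # 0.49, roughly half the light
print(round(stops_between(2.8, 4), 2))      # 1.03, about one stop narrower
```

This is why the familiar f-number sequence (f/2.8, f/4, f/5.6, f/8 and so on) steps by roughly the square root of 2: each step halves the light.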

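Aperture also controls the depth of field, the range of distances in the scene that appear acceptably sharp. A wide aperture gives a shallow depth of field, blurring the background to separate the subject from it, while a narrow aperture keeps more of the scene in focus and clearer to see.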
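The effect of aperture on depth of field can be estimated with the common hyperfocal-distance approximations: H = f²/(N c) + f, near limit s(H - f)/(H + s - 2f), far limit s(H - f)/(H - s). This sketch assumes a circle of confusion c of 0.03 mm (a conventional full-frame value) and uses a hypothetical 50 mm lens focused at 3 m; treat the results as rough estimates rather than exact optical predictions.

```python
import math


def hyperfocal_mm(f_mm: float, n: float, coc_mm: float = 0.03) -> float:
    """Hyperfocal distance H = f^2 / (N * c) + f, in mm."""
    return f_mm ** 2 / (n * coc_mm) + f_mm


def depth_of_field_mm(f_mm: float, n: float, subject_mm: float, coc_mm: float = 0.03):
    """Return (near, far) limits of acceptable sharpness in mm."""
    h = hyperfocal_mm(f_mm, n, coc_mm)
    near = subject_mm * (h - f_mm) / (h + subject_mm - 2 * f_mm)
    # Focused at or beyond the hyperfocal distance, sharpness extends to infinity.
    far = math.inf if subject_mm >= h else subject_mm * (h - f_mm) / (h - subject_mm)
    return near, far


for n in (1.8, 11):
    near, far = depth_of_field_mm(50, n, 3000)
    print(f"f/{n}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

At f/1.8 the sharp zone is only a few tens of centimetres deep, which is what blurs the background; at f/11 it stretches over several metres.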

2. Functioning of a camera sensor

Remember the photoelectric effect? Einstein won the Nobel Prize for explaining it, and we have all studied it in our high school textbooks. It is time to revise it, because image sensors, including the CMOS sensors used in most modern cameras, rely on a closely related process: light striking the sensor's silicon frees electrons that can then be collected and measured.

"We cannot solve our problems with the same thinking we used when we created them"

Albert Einstein
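The key idea of the photoelectric effect is that a photon's energy depends only on its wavelength (E = hc / λ), and it frees an electron only if that energy is large enough. In a silicon sensor the threshold is the bandgap, roughly 1.12 eV at room temperature. This sketch uses those two textbook values to check which wavelengths can free an electron; it ignores how efficiently each wavelength is actually absorbed.

```python
HC_EV_NM = 1239.84          # Planck constant x speed of light, in electronvolt-nanometres
SILICON_BANDGAP_EV = 1.12   # approximate bandgap of silicon at room temperature


def photon_energy_ev(wavelength_nm: float) -> float:
    """Photon energy E = h * c / wavelength, in electronvolts."""
    return HC_EV_NM / wavelength_nm


def can_free_electron(wavelength_nm: float) -> bool:
    """True if one photon carries enough energy to lift an electron across silicon's bandgap."""
    return photon_energy_ev(wavelength_nm) >= SILICON_BANDGAP_EV


for wl in (450, 550, 650, 1200):  # blue, green, red, near-infrared
    print(f"{wl} nm: {photon_energy_ev(wl):.2f} eV, frees an electron: {can_free_electron(wl)}")
```

Every visible wavelength clears the threshold comfortably, which is why silicon works so well for photography, while light much beyond about 1100 nm passes through undetected.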

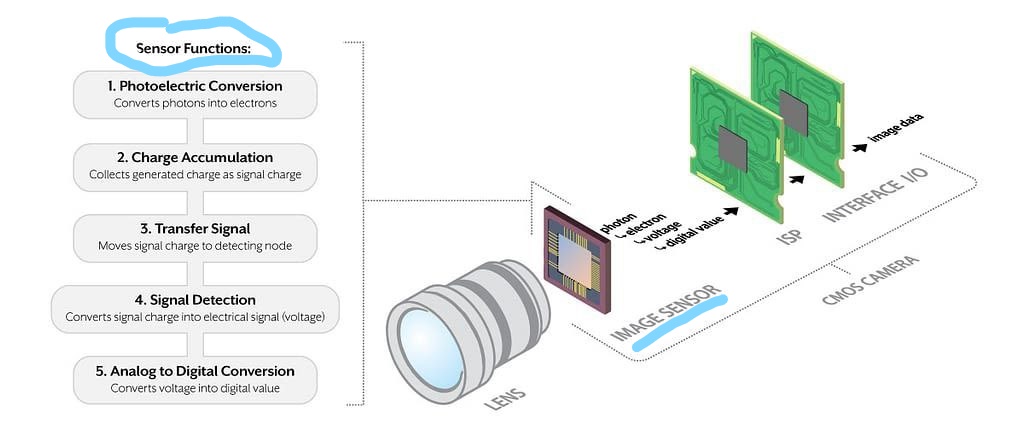

There are various types of sensors, but the purpose of any image sensor is the same: to convert incoming photons into electrical signals that can be encoded in the next stage, where the image can be viewed or stored. The sensor is the most important component of a camera and a major reason for the higher price of a good camera.

A camera sensor is a photosensitive surface that converts the energy of photons into electrons, which are collected in each pixel by being attracted to a positive electrical potential. The collected charge is then converted into an analogue voltage, amplified, and finally encoded as a digital signal so it can be displayed and stored as a picture.
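The chain from photons to an analogue voltage can be sketched for a single pixel. Every parameter value here is a hypothetical placeholder, not a specification of any real sensor: a quantum efficiency of 0.5 (half the photons free an electron), a full-well capacity of 30,000 electrons (the most charge the pixel can hold), and a conversion gain of 20 microvolts per electron.

```python
def photons_to_voltage_uv(photons: int,
                          quantum_efficiency: float = 0.5,      # hypothetical
                          full_well_electrons: int = 30_000,    # hypothetical
                          conversion_gain_uv_per_e: float = 20.0,  # hypothetical
                          analog_gain: float = 1.0) -> float:
    """Return the amplified analogue pixel voltage in microvolts."""
    # Only a fraction of incoming photons free an electron.
    electrons = int(photons * quantum_efficiency)
    # The pixel can hold only so much charge; beyond that it saturates (clips).
    electrons = min(electrons, full_well_electrons)
    # The collected charge becomes a small analogue voltage, which is then amplified.
    return electrons * conversion_gain_uv_per_e * analog_gain


print(photons_to_voltage_uv(10_000))     # a moderately lit pixel
print(photons_to_voltage_uv(1_000_000))  # a saturated (clipped) pixel
```

The clipping step is what shows up in photos as blown-out highlights: once a pixel's well is full, extra light adds no further detail.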

There are two major types of sensors used in cameras: CMOS (complementary metal-oxide-semiconductor) and CCD (charge-coupled device). Almost all modern cameras use CMOS sensors; CCD sensors are now largely outdated in cameras, mainly because they consume more power and read out data more slowly.

The surface of a camera sensor is a grid of photosites, or pixels. In a CMOS sensor, each pixel contains a light-sensitive element that collects charge along with its own amplifier circuitry, so photons are captured and converted into a voltage inside the pixel itself. Bigger pixels have a larger light-collecting area, so they capture more photons, and therefore more light, in the same exposure.
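The link between pixel size and light is geometric: for square pixels, the collecting area grows with the square of the pixel pitch, so doubling the pitch roughly quadruples the photons collected. This sketch assumes identical light, exposure, and fill factor for both pixels; the light level of 100 photons per square micrometre is a hypothetical value.

```python
def photons_collected(pixel_pitch_um: float, photons_per_um2: float) -> float:
    """Photons gathered by a square pixel; area grows with the square of the pitch."""
    return pixel_pitch_um ** 2 * photons_per_um2


light = 100.0  # hypothetical photons per square micrometre during one exposure
small = photons_collected(2.0, light)  # 2 micrometre pixel
large = photons_collected(4.0, light)  # 4 micrometre pixel
print(small, large, large / small)
```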

Most digital camera sensors have three layers above the pixels:

1. Color filters – give an otherwise color-blind sensor the ability to distinguish different colors of light

2. Microlenses – Tiny lenses focus incoming light specifically onto the photosensitive area of each photosite.

3. Protective layer – Protects the sensor and filters out certain wavelengths of light, such as infrared and ultraviolet, that the sensor should not record
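Since each pixel sits under a single color filter, each one records only one color. A widely used layout is the Bayer mosaic, a repeating 2 x 2 tile of red, green, green, and blue; the article does not name a specific layout, so the RGGB arrangement below is an assumption chosen for illustration.

```python
from collections import Counter


def bayer_filter_color(row: int, col: int) -> str:
    """Filter color above pixel (row, col) in an assumed RGGB Bayer mosaic."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


rows, cols = 4, 4
for r in range(rows):
    print(" ".join(bayer_filter_color(r, c) for c in range(cols)))

counts = Counter(bayer_filter_color(r, c) for r in range(rows) for c in range(cols))
print(counts)  # half the pixels sit under green filters
```

Because each pixel sees only one color, the camera later estimates the two missing colors at every pixel from its neighbours to build a full-color image.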

3. Converting data into digital form (image)

All the data converted from photons to electrons and then to voltage is carried as analogue signals, so the last step is converting these signals into digital form using an analogue-to-digital converter (ADC). The camera's software then reads the digital data, analyses its dynamic range and colours, and maps them so that the result is displayed as an image.
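At its core, the ADC step maps a continuous voltage onto one of 2^bits integer levels. This sketch assumes an idealised converter with a 1.0 V input range; the 12-bit and 14-bit depths are examples of resolutions commonly used for raw camera data, not values taken from this article. It covers only the conversion itself, not the later colour and tone processing.

```python
def adc_convert(voltage: float, v_ref: float = 1.0, bits: int = 12) -> int:
    """Map an analogue voltage in [0, v_ref] onto an integer code in [0, 2**bits - 1]."""
    max_code = 2 ** bits - 1
    clipped = min(max(voltage, 0.0), v_ref)  # inputs outside the range clip to the ends
    return round(clipped / v_ref * max_code)


for bits in (12, 14):
    print(f"{bits}-bit ADC: {2 ** bits} levels, full scale -> {adc_convert(1.0, bits=bits)}")
```

More bits mean finer steps between tones, which gives more room to recover shadows and highlights when editing.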
